How to Protect Your Information on AWS

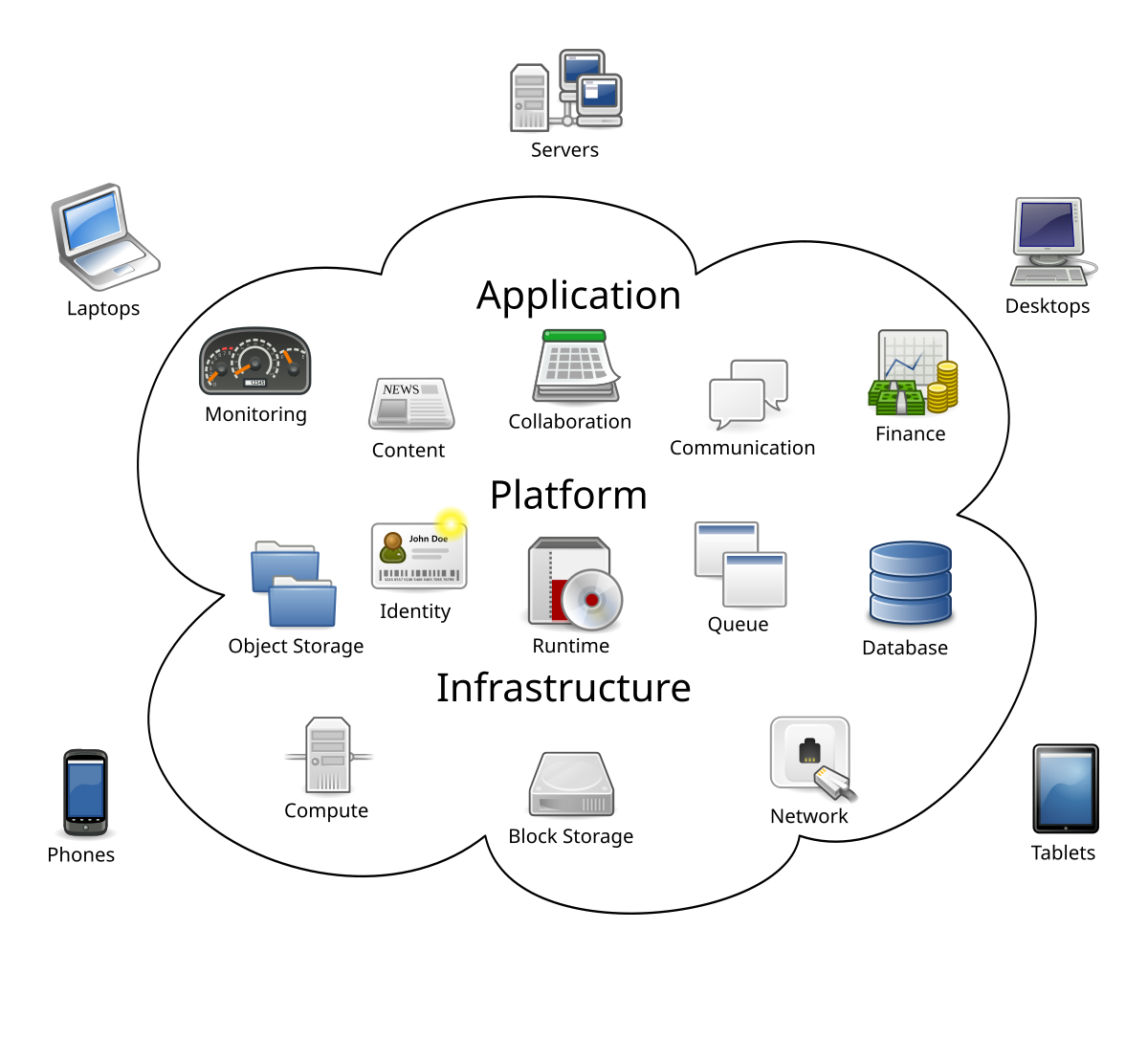

Understanding the Shared Responsibility Model

AWS is responsible for security of the cloud: the physical infrastructure, hardware, and software that run its services. The customer, in turn, is responsible for security in the cloud, i.e., the organization’s own content, the applications it runs on AWS, and identity and access management, as well as its internal infrastructure such as firewalls and network configuration.

Under this model, Deep Root Analytics was the party responsible for its data leak, and the consequences will likely linger for a long time.

How to Protect Your Information on AWS: 10 Best Practices

Enable CloudTrail across all AWS regions and turn on CloudTrail log validation:

Enabling CloudTrail allows logs to be generated, and the API call history provides access to data such as resource changes. With CloudTrail log validation on, you can detect any changes made to log files after they are delivered to the S3 bucket.

Enable access logging on CloudTrail S3 buckets:

These buckets contain the log data that CloudTrail captures. Enabling access logging allows you to track access and identify potential attempts at unauthorized access.

Enable flow logging for Virtual Private Cloud (VPC):

These flow logs allow you to monitor network traffic that crosses the VPC, alerting you to anomalous activity such as unusually high levels of data transfer.

Provision access to groups or roles using identity and access management (IAM) policies:

By attaching IAM policies to groups or roles instead of individual users, you reduce the chance of unintentionally giving excessive permissions and privileges to a user, and you make permission management more efficient.

Reduce access to the CloudTrail log buckets and use multi-factor authentication for bucket deletion:

Unrestricted access, even for administrators, increases the risk of unauthorized access if credentials are stolen in a phishing attack. If the AWS account is compromised, multi-factor authentication makes it harder for attackers to cover their tracks.

Encrypt log files at rest:

Only users who have permission to access the S3 buckets holding the logs should have decryption permission, in addition to access to the CloudTrail logs.

Regularly rotate IAM access keys:

Rotating keys and setting a standard password expiration policy helps prevent access through a lost or stolen key.

Restrict access to commonly used ports:

Limit access to the ports used by services such as SMTP, FTP, MSSQL, and MongoDB to required entities only.

Don’t use access keys with root accounts:

Doing so can easily compromise the account and open access to all AWS services if a key is lost or stolen. Create role-based accounts instead and avoid using the root user account altogether.

Remove unused keys and disable inactive users and accounts:

Both unused access keys and inactive accounts expand the attack surface and the risk of compromise.
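Several of these practices can be checked mechanically. The following is a minimal Python sketch, not an official AWS tool: it audits plain dictionaries shaped like the responses of the CloudTrail `describe_trails`, IAM `list_access_keys`, and EC2 `describe_security_groups` calls (fields such as `IsMultiRegionTrail`, `LogFileValidationEnabled`, `KmsKeyId`, `CreateDate`, and `IpPermissions`), so it runs without AWS credentials. The function names, the 90-day rotation window, and the port list are assumptions chosen for illustration. It flags inactive keys only; spotting keys that are active but never used would additionally need last-used data such as IAM's `GetAccessKeyLastUsed`.

```python
from datetime import datetime, timedelta, timezone

# Assumed policy values for illustration; the article does not specify them.
MAX_KEY_AGE = timedelta(days=90)
SENSITIVE_PORTS = {21: "FTP", 25: "SMTP", 1433: "MSSQL", 27017: "MongoDB"}


def audit_trail(trail):
    """Check one entry shaped like CloudTrail describe_trails()['trailList']."""
    name = trail["Name"]
    findings = []
    if not trail.get("IsMultiRegionTrail"):
        findings.append(f"{name}: trail does not cover all regions")
    if not trail.get("LogFileValidationEnabled"):
        findings.append(f"{name}: log file validation is off")
    if not trail.get("KmsKeyId"):
        # A KMS key lets you control who may decrypt the log files.
        findings.append(f"{name}: logs are not encrypted with a KMS key")
    return findings


def audit_access_keys(user, keys, now):
    """Check entries shaped like IAM list_access_keys()['AccessKeyMetadata']."""
    findings = []
    for key in keys:
        key_id = key["AccessKeyId"]
        if key["Status"] != "Active":
            # Inactive keys add risk without adding value, so delete them.
            findings.append(f"{user}: inactive key {key_id} should be deleted")
        elif now - key["CreateDate"] > MAX_KEY_AGE:
            findings.append(f"{user}: key {key_id} is due for rotation")
    return findings


def audit_security_group(group):
    """Flag sensitive TCP ports open to the internet in one security group entry."""
    findings = []
    for perm in group.get("IpPermissions", []):
        protocol = perm.get("IpProtocol")
        if protocol not in ("tcp", "-1"):  # "-1" means all protocols and ports
            continue
        open_to_world = any(
            r.get("CidrIp") == "0.0.0.0/0" for r in perm.get("IpRanges", [])
        ) or any(r.get("CidrIpv6") == "::/0" for r in perm.get("Ipv6Ranges", []))
        if not open_to_world:
            continue
        for port, service in SENSITIVE_PORTS.items():
            if protocol == "-1" or perm["FromPort"] <= port <= perm["ToPort"]:
                findings.append(f"{group['GroupId']}: {service} ({port}) open to the internet")
    return findings


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    trail = {"Name": "main", "IsMultiRegionTrail": True, "LogFileValidationEnabled": False}
    keys = [{"AccessKeyId": "AKIAEXAMPLE", "Status": "Active",
             "CreateDate": now - timedelta(days=200)}]
    group = {"GroupId": "sg-example", "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 27017, "ToPort": 27017,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}]}
    for finding in audit_trail(trail) + audit_access_keys("alice", keys, now) + audit_security_group(group):
        print(finding)
```

In practice the dictionaries would come from boto3 clients, for example `boto3.client("cloudtrail").describe_trails()["trailList"]`. Keeping the checks as pure functions makes them easy to test offline before wiring them to a real account.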

Here we review a few of the most common configuration mistakes administrators make with AWS.

Not knowing who is in charge of security

It’s a simple concept, but nuanced in execution, says Mark Nunnikhoven, vice president of cloud research at Trend Micro. The trick is figuring out which responsibility belongs to whom.

More importantly, AWS offers a variety of services, each of which requires a different level of responsibility from the customer; know the differences when picking your service. For example, EC2 puts the onus of security on you, leaving you responsible for configuring the operating system, managing applications, and protecting data. “It’s quite a lot,” Nunnikhoven says. In contrast, with Amazon Simple Storage Service (S3), customers focus only on securing the data going in and out, as Amazon retains control of the operating system and application.

Forgetting about logs

CloudTrail provides valuable log data, maintaining a history of all AWS API calls, including the identity of the API caller, the time of the call, the caller’s source IP address, the request parameters, and the response elements returned by the AWS service. As such, CloudTrail can be used for security analysis, resource management, change tracking, and compliance audits.

Saviynt research found that CloudTrail was often deleted, and log file validation was often disabled on individual instances.

Administrators cannot afford to leave CloudTrail off. If you don’t turn it on, you’ll be blind to the activity of your virtual instances during any future investigation. Some decisions need to be made to implement CloudTrail, such as where and how to store logs, but the time spent making sure CloudTrail is set up correctly will be well worth it.
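Because deleted trails and disabled validation were common findings, it is worth watching the logs for changes to logging itself. This minimal Python sketch assumes a CloudTrail log file that has already been downloaded and decompressed (CloudTrail delivers gzipped JSON with a top-level `Records` array). It reports the caller, time, and source IP of events such as `StopLogging` and `DeleteTrail`. The `find_tampering` helper and the exact event set are illustrative choices, not an AWS-defined list.

```python
import json

# Illustrative set of CloudTrail API calls that stop, change, or remove logging.
TAMPERING_EVENTS = {"StopLogging", "DeleteTrail", "UpdateTrail", "PutEventSelectors"}


def find_tampering(log_text):
    """Return caller, time, source IP, and trail name for logging-related events."""
    hits = []
    for record in json.loads(log_text).get("Records", []):
        if record.get("eventSource") != "cloudtrail.amazonaws.com":
            continue
        if record.get("eventName") not in TAMPERING_EVENTS:
            continue
        # requestParameters can be null in a record; userIdentity is guarded the same way.
        identity = record.get("userIdentity") or {}
        params = record.get("requestParameters") or {}
        hits.append({
            "event": record["eventName"],
            "caller": identity.get("arn"),
            "time": record.get("eventTime"),
            "source_ip": record.get("sourceIPAddress"),
            "trail": params.get("name"),
        })
    return hits


if __name__ == "__main__":
    sample = json.dumps({"Records": [{
        "eventSource": "cloudtrail.amazonaws.com",
        "eventName": "StopLogging",
        "eventTime": "2024-05-01T12:00:00Z",
        "sourceIPAddress": "203.0.113.7",
        "userIdentity": {"arn": "arn:aws:iam::111122223333:user/example"},
        "requestParameters": {"name": "main"},
    }]})
    for hit in find_tampering(sample):
        print(hit)
```

Running a check like this on a schedule, or feeding it from a separate account that the audited account cannot write to, helps ensure that a disabled trail is noticed rather than silently leaving a gap.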

Giving away too many privileges

Administrators often fail to set up thorough policies for a variety of user scenarios, instead choosing to make them so broad that they lose their effectiveness. Implementing policies and roles that restrict access reduces your attack surface, because it lowers the chance that the entire AWS environment is compromised when a key is exposed, account credentials are stolen, or someone on your team makes a configuration error.

Having powerful users and broad roles

A common misconfiguration is to grant the complete set of permissions for each AWS service. If an application needs the ability to write files to Amazon S3 and it has full access to S3, it can read, write, and delete every single file in S3 for that account. If the script’s job is to run a periodic cleanup of unused files, for example, there is no need for any read permissions. Instead, use the IAM service to give the application write access to one specific bucket in S3. By restricting permissions this way, the application cannot read or delete any files, inside or outside that bucket.
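A least-privilege policy for the write-only case described above might look like the following minimal Python sketch, which builds the IAM policy document as a plain dictionary. The helper `write_only_policy` and the bucket name `example-app-uploads` are hypothetical. The policy allows only `s3:PutObject` on objects in one bucket; because IAM denies anything that is not explicitly allowed, the application cannot read or delete objects, as long as no other policy attached to it grants more.

```python
import json


def write_only_policy(bucket):
    """Build an IAM policy document that only allows writing objects into one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "WriteOnlyToOneBucket",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # "/*" targets the objects inside the bucket, not the bucket itself.
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


if __name__ == "__main__":
    # "example-app-uploads" is a placeholder bucket name.
    print(json.dumps(write_only_policy("example-app-uploads"), indent=2))
```

The resulting JSON could then be attached to the role the application runs under, for example through IAM’s `PutRolePolicy` API, rather than to an individual user.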